Big-Time Data Terminology

The term "big data" is so broad that, when commenting on data management and the industry, it's better to consider specific topics such as data quality, data consistency or deriving value from data – or at least to discuss matters in those terms.

A presentation given this past week by Pierre Feligioni, head of real-time data strategy at S&P Capital IQ, defined "big data" as "actionable data," and sought to portray big data concerns as really being about four issues: integration, technology, content and scalability.

Integration, particularly the centralization of reference data, is the biggest challenge for managing big data, as Feligioni sees it. While structured data is already largely "normalized," unstructured data, which can include messaging, emails, blogs and Twitter feeds, still needs to be normalized.

Unstructured data is fueling exponential growth in data volumes, which justifies the name "big data." Volumes are now counted in terabytes (1,000 gigabytes) or even petabytes (1,000 terabytes). When unstructured data reaches those levels, central repositories that can collect and normalize it – and coordinate it with structured data – are a must, Feligioni contends.

Technology and scalability are the building blocks needed to make such central repositories functional, as he describes it. Natural language processing and semantic data approaches are also being applied. "The biggest challenge is understanding documents and creating analytics on top of this content, for the capability to make a decision to buy or sell," says Feligioni.

Scalability makes it possible to process more and more information, and is achieved through new resources, such as cloud computing, which carry their own issues and require additional decisions [as described in my column two weeks ago, "Cloud Choices"].

Everything that Feligioni calls part of "big data" actually revolves around getting higher quality data by incorporating more sources and checking them against each other to keep that data consistent. It's also about creating new value from data that can be acted upon by trading and investment operations professionals.
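To make the idea of checking sources against each other more concrete, here is a minimal sketch in Python. It assumes each source reports a single value for the same reference-data field and that a simple majority vote decides the consensus value; Feligioni did not describe a specific reconciliation rule, and the function name reconcile, the source names and the values are all hypothetical.

```python
from collections import Counter


def reconcile(values_by_source):
    """Return (consensus_value, flagged_sources) for one reference-data field.

    Assumed rule (illustrative only): a value wins if a strict majority of
    sources agree on it. Sources that disagree with the winner are flagged.
    With no strict majority, there is no consensus and every source is flagged
    for manual review.
    """
    if not values_by_source:
        return None, []
    counts = Counter(values_by_source.values())
    consensus, votes = counts.most_common(1)[0]
    # No strict majority: treat the field as inconsistent across sources.
    if votes * 2 <= len(values_by_source):
        return None, sorted(values_by_source)
    dissenters = sorted(s for s, v in values_by_source.items() if v != consensus)
    return consensus, dissenters


# Hypothetical feeds reporting the trading currency of one security.
feeds = {"vendor_a": "USD", "vendor_b": "USD", "exchange_feed": "GBP"}
print(reconcile(feeds))  # ('USD', ['exchange_feed'])
```

A majority vote is only one possible rule. In practice, a firm might instead weight sources by reliability or always defer to a designated "golden" source for certain fields.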

So whatever buzzwords are used, whether "big data" or the sub-categories under that umbrella, the real subject is quality, consistency and value. Other terms just describe the means.

Copyright Infopro Digital Limited. All rights reserved.

More on Data Management

After Dora, ITRS pursues agentic AI for autonomous monitoring

Chief product officer says firms can bolster data resilience with new forms of AI.

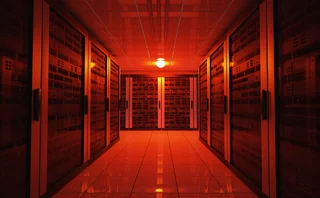

Geopolitics hits Middle East datacenters and firms’ operations

The IMD Wrap: Wei-Shen examines recent disruptions to AWS datacenters in the Middle East linked to the US-Israel strikes on Iran, and what it means for data and businesses operating in the region.

CME rankles market data users with licensing changes

The exchange began charging for historically free end-of-day data in 2025, angering some users.

Data heads scratch heads over data quality headwinds

Bank and asset manager execs say the pressure is on to build AI tools. They also say getting the data right is crucial, but not everyone appreciates that.

Reddit fills gaping maw left by Twitter in alt data market

The IMD Wrap: In 2021, Reddit was thrust into the spotlight when day traders used the site to squeeze hedge funds. Now, for Intercontinental Exchange, it is the new it-girl of alternative data.

Knowledge graphs, data quality, and reuse form Bloomberg’s AI strategy

Since 2023, Bloomberg has unveiled its internal LLM, BloombergGPT, and added an array of AI-powered tools to the Terminal. As banks and asset managers explore generative and agentic AI, what lessons can be learned from a massive tech and data provider?

ICE launches Polymarket tool, Broadridge buys CQG, and more

The Waters Cooler: Deutsche Börse acquires remaining stake in ISS Stoxx, Etrading bids for EU derivatives tape, Lofthouse is out at ASX, and more in this week’s news roundup.

Fidelity expands open-source ambitions as attitudes and key players shift

Waters Wrap: Fidelity Investments is deepening its partnership with Finos, which Anthony says hints at wider changes in the world of tech development.