Utilities: A New Approach to Reference Data Management

A WatersTechnology survey reveals the industry's opinions on reference data utilities.

Today’s financial firms must collect, process and manage an increasing amount of information, often using outdated legacy systems and duplicative processes. The handling of data is also now governed by stricter reporting requirements mandated by global regulators in the wake of the financial crisis. As a result, market participants are looking for solutions that will help alleviate the cost of modernizing legacy systems to handle current data-related issues. According to a recent whitepaper published by WatersTechnology and sponsored by SmartStream Technologies, more than one-third of market participants polled believe shared service utilities could be the best solution.

The whitepaper, A New Approach: Assessing Data Management Practices and Current Demand for a Reference Data Utility, looked at the concept of the ideal reference data utility. It was based on a survey of data operations professionals conducted in August 2015 that examined the issues currently driving financial firms’ reference data strategies, as well as what a reference data utility should offer to the industry, and market participants’ expectations for an industry-led utility.

According to the whitepaper, when it comes to reference data management, more than half of market participants want to optimize their trading capabilities with access to quality, timely reference data. And more than one-third of survey respondents believe the use of shared service utilities can alleviate the costs of modernizing legacy systems.

Given the increasing number of rules and regulations that financial firms have found themselves subjected to in recent years, there is a growing need for order and transparency when it comes to managing the data that flows through these organizations. This need has been compounded by the surge in the volume of information that organizations must manage due to the rise of the internet, social media and the expansion of financial markets into new regions, instruments and ideas. Financial firms need a robust, compliant, resource-efficient way to collect, analyze, manage and maintain this data. The trend towards siloed systems and processes in the past has meant that many firms house vast—sometimes disconnected—data infrastructures. As organizations try to cut costs, reduce headcount and tighten technology systems in response to market conditions, there is a growing realization that reference data management represents a cost center that can be tweaked or even wholly outsourced to alleviate the strain of regulation and budgetary constraints.

The whitepaper discusses the introduction of reference data utilities as a way to outsource some of the activity surrounding the collection and management of the data a financial firm needs to survive and compete in today’s markets. By providing users with access to data that has already been collected, normalized, cleansed and enriched, utilities remove some of this data management burden. Using information gathered from all users, they can create a reference data master copy that can be fed back to the entire client base. By performing the necessary data management tasks once and feeding the results to all users, utilities eliminate duplicative actions, reducing costs for users, and eliminating the risks inherent in legacy systems. To satisfy the concerns of those organizations that wish to maintain some level of control over their data management strategies, flexibility and customization should be a key component of the reference data utility offering.

By investigating what market participants want from their own reference data management strategies and how they believe a reference data utility should address these needs, the whitepaper provides insight into current data management trends, concerns and demands. So, how can professionals push their organizations to use data in the most effective way, and what tools are available to make this happen?

Current Climate

The warren of siloed systems and processes that have evolved over decades within many financial firms has led to situations where the same data is collected, managed, and analyzed at many different points throughout the organizational structure. In addition to the unnecessary costs of such duplicative activities, a lack of automation means simple mistakes can and often do pollute the entire transaction chain. As such, many financial firms now recognize the need to upgrade these legacy systems. The added pressure of demands from global regulators for greater transparency after the financial crisis has only emphasized the need for a new approach to data management.

In the current market, financial firms want to find a way to standardize the information they collect, produce and use in order to comply with these reporting standards, as well as reduce costs and increase efficiency. According to the whitepaper, 34.6 percent of data operations professionals believe a shared service utility can alleviate the cost of modernizing legacy systems and eliminate the risks of internal manual processes. Are reference data utilities the solution financial firms are looking for?

What is a Utility?

Reference data utilities centralize and mutualize the collection, normalization, cleansing, and enrichment of data. This information is then fed to organizations across the industry and integrated into their current systems. As such, utilities provide a fast, flexible, cost-efficient way for financial firms to access a clean feed of standardized reference data. The mutualized process means that the utility relies on its client base to contribute data and provide feedback. This raises the bar in terms of excellence, according to the whitepaper: “By contributing data, users increase the quality of the information disseminated by the utility, while also benefiting from the contributions made by every other user.” This means data quality improves, while the cost associated with achieving that quality falls. As a result, a data utility can target a key area of demand in the market—as the whitepaper shows, financial firms want to find a way to “both reduce operational costs and mitigate the risks associated with data governance and legacy integration.”
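To make the mutualized model more concrete, the following is a minimal Python sketch of how contributed records might be normalized, cleansed and merged into a single master copy. It is purely illustrative and is not drawn from the whitepaper: the function names (`normalize`, `build_master`), the field names, the sample identifier and the majority-vote rule are all assumptions made for the example, and a real utility would apply far richer validation and source-ranking rules.

```python
from collections import Counter, defaultdict


def normalize(record):
    """Trim and upper-case string values so equivalent contributions compare equal."""
    return {k: v.strip().upper() if isinstance(v, str) else v for k, v in record.items()}


def build_master(contributions, key="isin"):
    """Merge contributed records into one master record per identifier.

    Hypothetical rule: for each field, the most frequently contributed
    non-empty value wins.
    """
    votes = defaultdict(lambda: defaultdict(Counter))
    for record in contributions:
        clean = normalize(record)
        ident = clean.get(key)
        if not ident:
            continue  # cleansing: drop records that carry no identifier
        for field, value in clean.items():
            if field != key and value not in (None, ""):
                votes[ident][field][value] += 1

    master = {}
    for ident, fields in votes.items():
        # Keep the most common value seen for each field.
        master[ident] = {f: counts.most_common(1)[0][0] for f, counts in fields.items()}
        master[ident][key] = ident
    return master


# Made-up contributions from three users, plus one unusable record.
contributions = [
    {"isin": "XX0000000001", "name": "Example Corp ", "currency": "usd"},
    {"isin": "xx0000000001", "name": "EXAMPLE CORP", "currency": "USD"},
    {"isin": "XX0000000001", "name": "Example Corporation", "currency": "USD"},
    {"isin": "", "name": "Unknown", "currency": "EUR"},
]
master = build_master(contributions)
print(master)
```

In this sketch the work of reconciling the three differing contributions is done once, and every contributor would receive the same merged record back, which is the essence of the mutualization argument.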

According to the whitepaper, it is important for organizations in today’s financial markets to have access to data utilities “that can process data from a range of data providers, are vendor-neutral, and are generally considered to be trustworthy organizations that adhere to users’ requirements.” It notes that there are several types of organization already operating in the market that could provide the necessary level of service, including exchanges, data repositories and securities services providers. However, a key characteristic of any utility is that it is industry-led and therefore able to use customer feedback to create and apply standards that will satisfy industry demand for accurate, timely data via a robust but flexible infrastructure.

Collaboration is Key

For one firm working alone, reversing years of overlapping systems and redundant processes can be a daunting task. However, the use of a reference data utility provides a way for organizations to optimize their current data management infrastructure and gain a vital injection of clean data for use on an enterprise-wide basis.

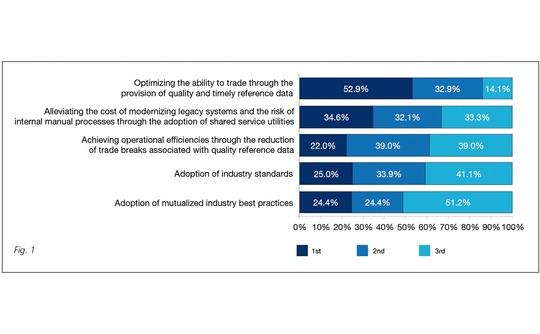

The survey results show that the needs and concerns of industry participants currently align very closely with the services that a reference data utility can offer. In addition to needing access to timely, quality reference data and cutting costs while mitigating the risks of manual processes, the whitepaper demonstrates that data management professionals prioritize the adoption of industry standards (25 percent) and mutualized industry best practices (24.4 percent), while increasing operational efficiency and reducing trade breaks is a key concern for 22 percent when devising a reference data strategy (see figure 1).

Cutting Costs

A major area of interest for market participants surveyed for the whitepaper was the ever-present need for cost efficiency, particularly in the post-financial crisis world. Indeed, this aspect of demand is unsurprising, given the current low-return, regulation-heavy environment in which these organizations must operate. According to the whitepaper, 30.1 percent of survey participants would hand over the majority of their organization’s data management needs to a utility in order to benefit from cost-efficiencies. A further 34.6 percent “believe a shared service utility can alleviate the cost of modernizing legacy systems and eliminate the risks of internal manual processes.”

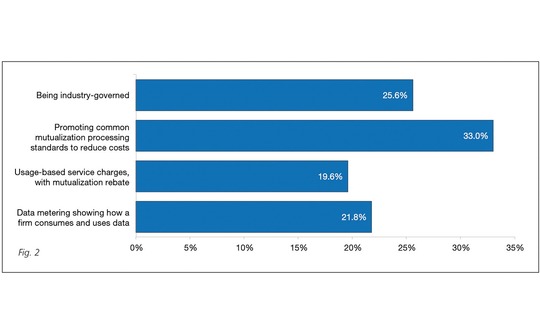

Mutualization is the route by which reference data utilities can offer these cost efficiencies. The collaborative model used by a utility allows it to import data from industry users to cleanse, package and deliver it back to market participants. Thus, the utility centralizes the relevant data management activities, performing them once, rather than each individual organization gathering and managing the same datasets for its own use. Mutualization reduces costs and increases quality since all players provide input and feedback to the utility, and so have a stake in maintaining the standard of information used. The whitepaper points out that 25.6 percent of respondents to the survey believe the ideal utility would be industry-led in this way, while 33 percent see the most important role of a reference data utility as “promoting common mutualization standards that will reduce costs” (see figure 2).

Beyond Compliance

Reference data utilities are also well placed to help financial firms manage their growing compliance burdens. Global regulators are currently hammering out a long list of reporting requirements. Under the European Market Infrastructure Regulation (EMIR) and the US Dodd-Frank Wall Street Reform and Consumer Protection Act, for example, firms must adhere to strict rules for recording and reporting transactions. As such, it makes sense for these organizations to create a system whereby transactions and counterparties are easily identified and recorded.

The whitepaper notes that reference data utilities can play a key role in such regulatory initiatives by applying data integrity to support reporting efforts. However, it also notes that flexibility is key to ensuring that the utility can address future changes to regulations. In this respect, the use of consumer feedback to create and apply standards will be crucial, underlining the need for an industry-led effort.

Given the weight of compliance pressure to which financial firms are already subject, the temptation might be to create data management strategies with the sole aim of ticking regulatory boxes.

However, tightening data management standards to improve quality and enrich data should also bolster firms’ bottom lines. As the whitepaper points out, “access to this data also allows financial firms to differentiate themselves by making investment decisions based on unique market insight.”

Indeed, in addition to supporting financial firms’ efforts to meet new regulatory reporting requirements, the whitepaper shows data management professionals expect reference data utilities to offer a range of services. Nearly half (47.1 percent) of respondents said utilities must be able to supplement and enrich data sources to serve business-critical processes. Nearly one-third (31.3 percent) said they expect a utility to monitor corporate actions and apply any changes to the data, while slightly more (32.5 percent) prioritize access to additional market information from primary market data. While less important, managing data licensing controls (24.6 percent) and preventing the commingling of customer and vendor data (22 percent) are additional issues that remain on the radars of data professionals assessing what a utility could and should offer a financial organization.

Using a Utility

The end result of using a utility should be that the cost of collecting and maintaining accurate, timely reference data decreases, while quality increases. Utilities are able to collect, normalize, cleanse, enrich and integrate reference data from a wide range of sources, feeding it to user-organizations across the industry. All users contribute data and also benefit from the other users’ data, increasing the quality of the information available.

This mutualization aspect ensures timely access to high-quality data. As such, the utility allows organizations to both reduce operational costs and mitigate the risks that are currently associated with data governance and legacy integration.

According to survey participants, utilities need to prioritize data quality and speed, a task that has become increasingly difficult for financial organizations to tackle alone in recent years as the volume of data produced and used by such firms has exploded. Firms continue to process this information on outdated systems, which leads to frequent mistakes that may then be repeated across the industry.

Market participants need a way to simplify and strengthen the process of data collection and management, while also cutting costs. The whitepaper demonstrates that the benefits of using a utility, such as access to timely, accurate data and the ability to reduce data management-related costs, have convinced many financial firms of the advantages that can be gained from integrating the services of a reference data utility.

________________________________________

The Ideal Reference Data Utility

A whitepaper published in September 2015 by WatersTechnology and sponsored by SmartStream Technologies examines current attitudes towards data management and reference data utilities in the financial services industry.

The whitepaper, A New Approach: Assessing Data Management Practices and Current Demand for a Reference Data Utility, uses a survey of data operations professionals conducted the previous month to explore the concept of the ideal reference data utility. It examines the issues that currently drive financial firms’ reference data strategies, what a reference data utility should offer to the industry, and market participants’ expectations for an industry-led utility.

Key findings from the whitepaper:

- Nearly half (46.2 percent) of respondents to the survey believe solving issues relating to data quality and timeliness should be the top priority for an industry-led reference data utility.

- More than half (52.9 percent) identify the main driver behind their organization’s reference data strategy as “optimizing the ability to trade through the provision of quality and timely reference data.”

- Of data professionals polled, 34.6 percent believe a shared service utility can alleviate the cost of modernizing legacy systems and eliminate the risks of internal manual processes.

- Nearly one-third (30.1 percent) of respondents would like an industry-led reference data utility to handle the majority of their organization’s data management needs due to the cost-effectiveness of the mutualization process.

- Financial firms expect utilities to: provide the ability to supplement and enrich data sources to serve business critical processes (47.1 percent); monitor corporate actions and apply relevant reference data changes (31.3 percent); provide access to additional market information from primary market data (32.5 percent); manage data licensing controls (24.6 percent); and prevent commingling of customer and vendor data (22 percent).

- One-third of those polled see the most important role of a reference data utility as promoting common mutualization standards that will reduce costs.

Copyright Infopro Digital Limited. All rights reserved.
